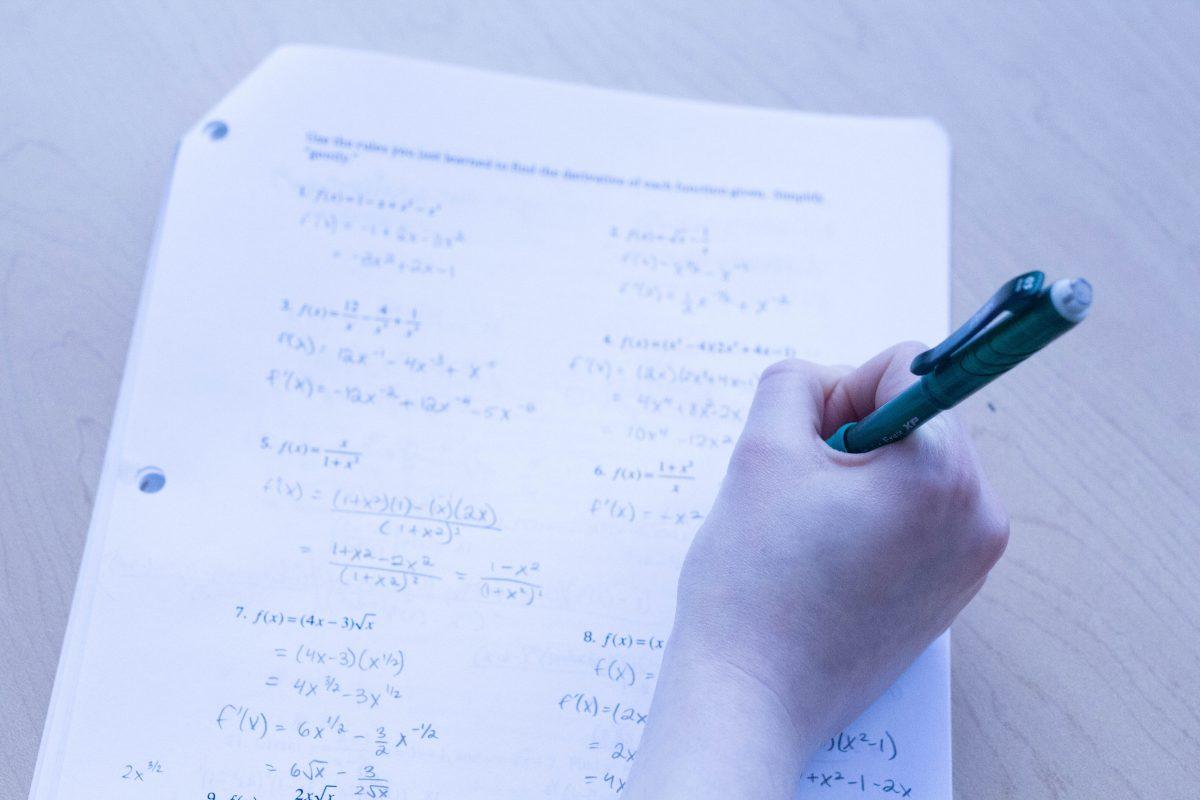

The capabilities of OpenAI’s new o3 model are terrifying. o3 is an unreleased large language model (the same type of model used by ChatGPT) that is specifically designed to simulate human reasoning. This design allows o3 to complete incredibly difficult tasks; according to OpenAI, o3 outperforms 99.8% of competitive programmers on Codeforces and scores better than PhD experts on math and science exams.

OpenAI CEO Sam Altman’s recent comments about Artificial General Intelligence (AGI), a term used to describe an AI agent that can do every intellectual task a human can, raise further concern. In a blog post published following the announcement of o3, Altman wrote, “We are now confident we know how to build AGI […] We believe that, in 2025, we may see the first AI agents ‘join the workforce’ and materially change the output of companies.” Although shareholders might rejoice at these remarks, most individuals likely feel a sense of existential dread upon learning that all jobs, both low- and high-skill, could soon be entirely automated. Does o3 signal the impending fall of humanity at the hands of AGI?

Although o3 does achieve state-of-the-art results, people should be skeptical of lofty claims made by executives like Altman. The hype surrounding innovation bolsters the funding of tech companies, which gives tech executives an incentive to exaggerate the implications of their latest breakthroughs. Elon Musk, for one, has promised fully autonomous cars every year since 2015 and has failed to deliver every single time. Statements made by Altman should be viewed not as scientific claims based on new empirical data but as the claims of a businessman who is paid to attract investment into his company.

In the case of AGI, Altman misrepresents the data in two key ways. First, he downplays the cost associated with running an AGI. According to ARC Prize, it costs upwards of $500,000 for o3 to complete ARC-AGI (a logic test consisting of 100 simple puzzles), while most people can finish the test in just a few hours. This staggering price stems from o3’s use of a technique called chain-of-thought (CoT) reasoning. Much like a human, a CoT model solves a problem by breaking it down into smaller, logical steps. This method reduces logical contradictions and mistakes, but it also means that smarter CoT models, which break problems down into more steps, require more computational resources and are incredibly expensive to operate.
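
To put that figure in per-puzzle terms, here is a minimal Python sketch that divides the quoted total by the number of puzzles. Both numbers are taken as given from the paragraph above; the function name `cost_per_puzzle` is an illustrative choice, not anything published by ARC Prize or OpenAI.

```python
# Figures quoted in the article (attributed there to ARC Prize); treated as given.
TOTAL_COST_USD = 500_000   # quoted lower bound for one o3 run of ARC-AGI
NUM_PUZZLES = 100          # number of puzzles in the test, per the article


def cost_per_puzzle(total_cost_usd: float, num_puzzles: int) -> float:
    """Average spend per puzzle for a single benchmark run."""
    return total_cost_usd / num_puzzles


per_puzzle = cost_per_puzzle(TOTAL_COST_USD, NUM_PUZZLES)
print(f"Average cost per puzzle: ${per_puzzle:,.0f}")
```

On those figures, each "simple" puzzle costs on the order of $5,000 to solve, which is the scale behind the claim that o3 is far from cost-effective.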

Even if we are close to achieving AGI, the price tag of o3 suggests that we are many innovations away from achieving cost-effective AGI. For decades after its invention, AGI will likely act more like an oracle, demanding millions of dollars in exchange for answers to our most pressing questions, than a practical agent capable of replacing human workers.

Altman also misrepresents AGI progress by downplaying the law of diminishing returns (which states that as more resources are invested in a given area of AI advancement, the incremental gains in results and efficiency decrease). The AI boom of recent years has been achieved with a simple paradigm: make models larger. This approach has led to significant progress but, as evidenced by the GPT series, it has become less effective over time. The uptick in intelligence from GPT-2 to GPT-3 was massive, while the difference between GPT-3.5 and GPT-4 was much smaller. Simply increasing model size yielded diminishing returns in model competency, and the GPT series eventually stopped improving under this paradigm.

OpenAI’s new CoT series (the o1 and o3 models) leverages a different paradigm: give models more time and computing resources to “think” about a problem. Using this strategy, OpenAI achieved a substantial increase in intelligence from o1 to o3 in just a few months. If you extrapolate this trajectory linearly, it does appear that Altman will achieve AGI in 2025. However, the progress curve of CoT models will not be linear; just as with the GPT series, o4 will see far less incremental improvement than o3, o5 will be nearly identical to o4, and so on.

This prediction is supported by data. According to ARC Prize, to increase ARC-AGI performance from 76% to 88%, o3 demands 172 times more computing power. The cost of CoT models scales exponentially with performance, meaning that the law of diminishing returns is already in full effect. o3 is nearing the limit of what is possible with CoT models, and further paradigm shifts will soon be required in order for AGI development to continue.
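
The arithmetic behind that claim can be sketched in a few lines of Python. The only inputs are the two scores and the 172x multiplier quoted above. The exponential form `cost = c * k**score` is an assumption made here for illustration; two data points cannot establish the true shape of the curve, and the helper names are hypothetical.

```python
import math

# Figures quoted in the article (attributed there to ARC Prize).
LOW_SCORE = 76.0          # ARC-AGI score, percent
HIGH_SCORE = 88.0         # ARC-AGI score, percent
COMPUTE_MULTIPLIER = 172  # extra compute quoted for moving from LOW to HIGH


def per_point_factor(low: float, high: float, multiplier: float) -> float:
    """Compute growth per percentage point, ASSUMING cost = c * k**score."""
    return multiplier ** (1.0 / (high - low))


def projected_multiplier(k: float, from_score: float, to_score: float) -> float:
    """Extra compute to move between two scores under the same assumption."""
    return k ** (to_score - from_score)


k = per_point_factor(LOW_SCORE, HIGH_SCORE, COMPUTE_MULTIPLIER)
doublings = math.log2(COMPUTE_MULTIPLIER)

print(f"Compute factor per percentage point: {k:.2f}")
print(f"Doublings of compute for 12 points: {doublings:.1f}")
print(f"Hypothetical multiplier from 88% to 100%: {projected_multiplier(k, 88, 100):.0f}")
```

Under this assumed model, each additional percentage point multiplies the compute bill by roughly 1.5, and the 12-point gain corresponds to more than seven doublings of compute. If the same rate held, closing the remaining 12 points would cost another factor of about 172, which is the sense in which returns are diminishing.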

It is inevitable that humanity will one day build cost-effective AGI, but the fact that this invention is a long way off should be a comfort to all. Altman’s recent comments are only frightening because of the shockingly short timeline he forecasts. AGI itself is not scary, but the sudden development of AGI under a for-profit company, with no governmental regulatory measures yet in place, certainly is. A longer timeline for the creation of AGI means that we can prepare for its ethical integration into society.